Key AI/ML algorithms by category, with industry use cases and common examples.

Explore the key AI/ML learning types (Supervised, Unsupervised, Semi-Supervised, and Reinforcement Learning), along with Deep Learning, highlighting their distinct methodologies and applications across industries.

AI/ML systems are mainly categorized into four types based on training methods:

· Supervised Learning

· Unsupervised Learning

· Semi-Supervised Learning

· Reinforcement Learning

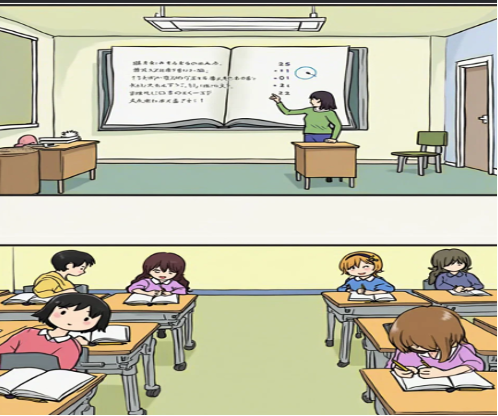

1. Supervised Learning: It trains models on labelled datasets, like learning from a textbook that includes the answers: the model's predictions are checked against the known outcomes, and the model is adjusted to reduce its errors.

Regression: It models the relationship between a dependent variable (outcome) and independent variables (features). It predicts or estimates the outcome based on input values.

· Linear Regression: Assumes a linear relationship between the outcome and the features and predicts a continuous value (e.g., sales volume).

· Logistic Regression: Despite its name, a classifier. It predicts the probability of a categorical outcome such as "Yes/No" (e.g., customer churn prediction) and can be extended to multi-class problems.
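For a single feature, the least-squares line behind linear regression can be computed in closed form. The sketch below, in plain Python, fits `y = intercept + slope * x`; the function name and the spend/sales figures are made up for illustration.

```python
def fit_simple_linear_regression(xs, ys):
    """Least-squares fit of y = intercept + slope * x for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical data: advertising spend vs. units sold (exactly y = 2x + 1)
spend = [1.0, 2.0, 3.0, 4.0]
sales = [3.0, 5.0, 7.0, 9.0]
b0, b1 = fit_simple_linear_regression(spend, sales)
print(b0, b1)  # 1.0 2.0
prediction = b0 + b1 * 5.0  # predicted sales for a spend of 5.0
```

Libraries generalise this to many features. Logistic regression instead passes the linear score through a sigmoid and has no closed-form solution, so it is fitted iteratively.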

Classification: It assigns data points to predefined classes, either binary or multiclass. Probabilistic classifiers typically turn raw scores into class probabilities with a sigmoid (binary) or softmax (multiclass) function.
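A minimal sketch of the two output functions mentioned above: `sigmoid` turns one score into a probability, and `softmax` turns a list of scores into a probability distribution over classes. The example scores are arbitrary.

```python
import math

def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1) for binary classification.

    Fine for moderate scores; a very large negative z would overflow math.exp.
    """
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Turn a list of class scores into probabilities that sum to 1 (multiclass)."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))  # 0.5
probs = softmax([2.0, 1.0, 0.1])
print(probs.index(max(probs)))  # 0 (the highest score gets the highest probability)
```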

· KNN (K-Nearest Neighbours): Classifies a data point by majority vote among its K nearest neighbours in the feature space. This model answers “Which category does this data point belong to?”

E.g.: Recommending a movie genre (e.g., horror, thriller) based on user preferences.
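A small KNN sketch in plain Python, using Euclidean distance and a majority vote. The two "preference" features and their values are hypothetical, chosen only to echo the genre example.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(p, query), label) for p, label in zip(train_points, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical user features: (liking for suspense, liking for jump scares), 0-10 scale
points = [(8, 9), (7, 8), (9, 9), (8, 2), (9, 3), (7, 1)]
labels = ["horror", "horror", "horror", "thriller", "thriller", "thriller"]
print(knn_predict(points, labels, (8, 8), k=3))  # horror
```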

· Decision Tree/Random Forest: answers “Which category does this data point belong to?”

· Decision Tree splits data into categories through a sequence of decision rules on feature values (e.g., Is it A, B, or C?).

· Random Forest is an ensemble of decision trees, each trained on a random subset of the data and features; combining their votes improves accuracy and reduces overfitting.

E.g.: Predicting heart failure risk or detecting fraudulent transactions
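Decision trees pick each split so that the resulting groups are as pure as possible. One common purity measure (used by CART) is Gini impurity. The sketch below finds the best single threshold on one feature. A full tree would repeat this recursively on each side, and a random forest would train many such trees on random samples and take a majority vote. The function names and transaction data are illustrative.

```python
def gini(labels):
    """Gini impurity: 0 when all labels are the same, higher when they are mixed."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(values, labels):
    """Find the threshold on one feature that minimises the weighted child impurity."""
    best_threshold, best_score = None, float("inf")
    n = len(values)
    for threshold in sorted(set(values)):
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        if not left or not right:
            continue  # a split must produce two non-empty children
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score

# Hypothetical data: transaction amount vs. fraud label
amounts = [20, 35, 50, 900, 1200, 1500]
is_fraud = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(amounts, is_fraud))  # (50, 0.0): "amount <= 50" separates the classes
```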

· Support Vector Machine (SVM): It answers “How do you separate data points into distinct classes?”

· SVM finds the optimal boundary (hyperplane) between two classes: the one with the widest margin to the nearest training points, which are called support vectors. Kernel functions let it find such boundaries in a high-dimensional feature space.

E.g.: Image classification for prostate cancer detection using protein biomarkers.
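A linear soft-margin SVM can be trained by subgradient descent on the hinge loss, which penalises points that fall inside the margin or on the wrong side of the boundary. This is a simplified sketch (no kernels, fixed learning rate, hypothetical 2-D toy data with labels +1/-1); production libraries typically use more specialised solvers.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=100):
    """Linear soft-margin SVM via per-sample subgradient descent on the
    regularised hinge loss: lam/2 * ||w||^2 + max(0, 1 - y * (w.x + b)).
    Labels must be +1 or -1."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Inside the margin or misclassified: step towards classifying x correctly
                w = [wi - lr * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Outside the margin: only the regularisation term shrinks w
                w = [wi - lr * lam * wi for wi in w]
    return w, b

def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x lies."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical 2-D data: two well-separated groups
points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(points, labels)
print([predict(w, b, p) for p in points])  # [1, 1, 1, -1, -1, -1]
```

The points closest to the learned boundary, such as (2, 2) and (-2, -2) here, are the ones that keep triggering updates; they play the role of support vectors.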

Supervised Neural Networks (ANN/Deep Learning): Trained with labelled input-output pairs to learn mappings from inputs to outputs.

Classification problems: Predict discrete categories (e.g., whether an application is risky or not) using a Fully Connected Neural Network (FCNN), or a Convolutional Neural Network (CNN) for image classification.

Regression problems: Predict continuous values (e.g., housing prices, stock prices) using an FCNN with a linear activation at the output layer.

Time-series problems: Predict sequences (e.g., forecasting weather, traffic, seasonal sales) using Recurrent Neural Networks (RNN) or Long Short-Term Memory networks (LSTM).

Computer Vision: Identify and locate objects in an image using Convolutional Neural Networks (CNN), Region-based CNNs (R-CNN), or the various versions of YOLO (You Only Look Once).

Natural Language Processing: Build chatbots and sentiment analysis, language translation, and interpretation systems using transformer-based architectures such as BERT and GPT.
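To make the FCNN idea concrete, here is a forward pass through a tiny fully connected network in plain Python: a hidden layer with ReLU activation and a single linear output unit, as used for regression. The weights are hypothetical hand-picked numbers; in practice they are learned with backpropagation, which this sketch leaves out.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z  # linear activation, typical for a regression output layer

def dense(inputs, weights, biases, activation):
    """Fully connected layer: each output unit is activation(w . inputs + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical weights for a 2-input -> 2-hidden -> 1-output network
W1, b1 = [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0]
W2, b2 = [[2.0, 3.0]], [0.5]

x = [2.0, 1.0]
hidden = dense(x, W1, b1, relu)           # [0.0, 2.0]
output = dense(hidden, W2, b2, identity)  # [6.5]
print(output)
```

For classification, the same structure would end in a sigmoid or softmax output instead of the identity.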

2. Unsupervised Learning:

It trains models on unlabelled data, discovering patterns from the data's natural affinities rather than predefined categories. It is like grouping attendees at an event by shared interests: no one hands out labels, yet groups emerge from their inherent tendencies.

Clustering: This category of algorithms groups data points by similarity.

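K-Means Clustering partitions data into K groups by alternately assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points, which reduces the total distance between points and their centroids. It helps identify distinct groups in a dataset.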

Use case: Identifying customer segments for targeted campaigns.
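A plain-Python sketch of the K-Means loop described above (Lloyd's algorithm). For reproducibility it initialises the centroids with the first K points; libraries usually use random or k-means++ initialisation instead. The customer data is hypothetical.

```python
import math

def kmeans(points, k, iterations=10):
    """Plain K-Means (Lloyd's algorithm) on points given as tuples of numbers."""
    # Deterministic initialisation for reproducibility: the first k points
    centroids = [list(p) for p in points[:k]]
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[i] = [sum(coord) / len(members) for coord in zip(*members)]
    return centroids, clusters

# Hypothetical customers: (visits per month, average spend in $10s)
customers = [(1, 1), (10, 10), (1, 2), (2, 1), (10, 11), (11, 10)]
centroids, segments = kmeans(customers, k=2)
print(segments)  # [[(1, 1), (1, 2), (2, 1)], [(10, 10), (10, 11), (11, 10)]]
```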

Hierarchical Clustering creates a tree of clusters, showing the hierarchical relationships between similar data points.

Use case: Grouping similar topics in a book or related news articles over the past year.
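A minimal sketch of bottom-up (agglomerative) hierarchical clustering with single linkage, on hypothetical one-dimensional values. Every point starts as its own cluster, and the two closest clusters are merged repeatedly; the recorded merges form the tree (dendrogram).

```python
def agglomerative(points, num_clusters):
    """Single-linkage agglomerative clustering on 1-D values.

    Returns the remaining clusters and the list of merges (the dendrogram steps).
    """
    clusters = [[p] for p in points]
    merges = []
    while len(clusters) > num_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the closest members of two clusters
                d = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((clusters[i], clusters[j], d))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters, merges

clusters, merges = agglomerative([1.0, 1.5, 5.0, 5.2, 9.0], num_clusters=3)
print(clusters)  # [[1.0, 1.5], [5.0, 5.2], [9.0]]
```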

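DBSCAN (Density-Based Spatial Clustering of Applications with Noise) groups points that lie in dense regions and labels points in sparse regions as noise, i.e., outliers.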

Use case: Identifying clusters in geospatial data and pinpointing one-off occurrences.
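A minimal O(n²) DBSCAN sketch. `eps` is the neighbourhood radius and `min_pts` the number of points (including the point itself) needed for a "core" point; the coordinates are hypothetical.

```python
import math

def dbscan(points, eps, min_pts):
    """Minimal DBSCAN: returns a cluster id per point, or -1 for noise."""
    labels = [None] * len(points)  # None = not yet visited

    def neighbours(i):
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        labels[i] = cluster_id
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_pts:  # j is a core point: keep expanding
                queue.extend(j_neighbours)
        cluster_id += 1
    return labels

# Hypothetical locations: two dense groups and one isolated event
locations = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (50, 50)]
print(dbscan(locations, eps=1.5, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, -1]
```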

Dimensionality Reduction reduces the number of input features, either by keeping only the most informative ones or by combining them into fewer new dimensions. This removes noise, reduces model complexity, and improves efficiency.

Principal Component Analysis (PCA) transforms correlated features into uncorrelated components, ranked by how much of the data's variance each one explains; keeping the top components retains most of the information in fewer dimensions.
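The first principal component is the top eigenvector of the data's covariance matrix. This plain-Python sketch centres the data, builds the covariance matrix, and approximates that eigenvector with power iteration; the toy data lies exactly on the line y = 2x, so the expected direction is known.

```python
import math

def first_principal_component(data, iterations=100):
    """Direction of maximum variance, found by power iteration on the covariance matrix."""
    n, dim = len(data), len(data[0])
    means = [sum(row[d] for row in data) / n for d in range(dim)]
    centred = [[row[d] - means[d] for d in range(dim)] for row in data]
    cov = [
        [sum(r[a] * r[b] for r in centred) / (n - 1) for b in range(dim)]
        for a in range(dim)
    ]
    vec = [1.0] * dim  # starting guess; must not be orthogonal to the answer
    for _ in range(iterations):
        nxt = [sum(cov[a][b] * vec[b] for b in range(dim)) for a in range(dim)]
        norm = math.sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]
    return vec

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
pc1 = first_principal_component(data)
print(pc1)  # approximately [0.447, 0.894], i.e. the direction (1, 2) normalised
```

Projecting each centred point onto `pc1` would give a one-dimensional representation that keeps all of this toy data's variance.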

t-SNE (t-distributed Stochastic Neighbor Embedding) projects complex, unlabelled data into 2D or 3D plots while keeping similar points close together, revealing clusters and structure that are hard to see in the raw data.

Autoencoders are neural networks that compress data into a lower-dimensional latent space and then reconstruct it; the compression bottleneck forces them to discard irrelevant dimensions and keep the essential features.

Association Learning discovers relationships between items that frequently occur together (e.g., products bought in the same transaction), helping to group related items.

Apriori finds frequent item sets and generates association rules, helping identify items that can be bundled together.
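A compact Apriori sketch in plain Python. It grows item sets one item at a time and prunes any candidate that has an infrequent subset (the Apriori property). The baskets and the support threshold are hypothetical.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {itemset: support} for all item sets meeting min_support (a fraction)."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def support(itemset):
        return sum(1 for t in transactions if itemset <= t) / n

    items = {item for t in transactions for item in t}
    current = [frozenset([i]) for i in items if support(frozenset([i])) >= min_support]
    frequent = {s: support(s) for s in current}
    size = 2
    while current:
        # Candidate generation: join frequent (size-1)-item sets ...
        candidates = {a | b for a in current for b in current if len(a | b) == size}
        # ... and prune candidates with any infrequent subset
        candidates = {
            c for c in candidates
            if all(frozenset(sub) in frequent for sub in combinations(c, size - 1))
        }
        current = [c for c in candidates if support(c) >= min_support]
        frequent.update({c: support(c) for c in current})
        size += 1
    return frequent

baskets = [{"bread", "milk"}, {"bread", "butter"}, {"bread", "milk", "butter"}, {"milk"}]
frequent = apriori(baskets, min_support=0.5)
print(frequent[frozenset({"bread", "milk"})])  # 0.5
# Rule "bread -> milk": confidence = support(bread, milk) / support(bread)
confidence = frequent[frozenset({"bread", "milk"})] / frequent[frozenset({"bread"})]
print(round(confidence, 2))  # 0.67
```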

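FP-Growth finds the same frequent item sets as Apriori but is usually faster on large datasets, because it compresses the transactions into a prefix tree (FP-tree) instead of repeatedly generating and testing candidate item sets.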

Eclat also finds item bundles for Market Basket Analysis, but it stores, for each item, the list of transactions that contain it and intersects these lists to count how often item sets occur.

Anomaly Detection: This category of learning identifies rare patterns or outliers in the data.

Isolation Forest detects outliers with an ensemble of random trees: rare data points are isolated by fewer random splits than normal ones, which makes the method well suited to fraud detection.
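A simplified one-dimensional sketch of the Isolation Forest idea. Each tree splits a random sub-sample at random thresholds; a point's score uses the standard formula s = 2^(-average path length / c(n)), where c(n) normalises for sample size. The sub-sample size of 8 and the transaction amounts are hypothetical, chosen to suit the toy data.

```python
import random

def c(n):
    """Average path length of an unsuccessful search in a binary search tree of n
    points; used to normalise path lengths (exact harmonic number H(n-1))."""
    if n <= 1:
        return 0.0
    harmonic = sum(1.0 / i for i in range(1, n))
    return 2.0 * harmonic - 2.0 * (n - 1) / n

def build_tree(sample, rng, depth=0, max_depth=8):
    """Recursively split at random thresholds until points are isolated."""
    if len(sample) <= 1 or depth >= max_depth or min(sample) == max(sample):
        return ("leaf", len(sample))
    split = rng.uniform(min(sample), max(sample))
    left = [v for v in sample if v < split]
    right = [v for v in sample if v >= split]
    return ("node", split,
            build_tree(left, rng, depth + 1, max_depth),
            build_tree(right, rng, depth + 1, max_depth))

def path_length(x, tree, depth=0):
    """Splits needed to reach x's leaf, plus an estimate for leaves left unsplit."""
    if tree[0] == "leaf":
        return depth + c(tree[1])
    _, split, left, right = tree
    return path_length(x, left if x < split else right, depth + 1)

def anomaly_scores(data, n_trees=100, sample_size=8, seed=0):
    """Scores near 1 suggest anomalies; scores well below 0.5 look normal."""
    rng = random.Random(seed)
    trees = [build_tree(rng.sample(data, sample_size), rng) for _ in range(n_trees)]
    scores = []
    for x in data:
        avg_path = sum(path_length(x, t) for t in trees) / n_trees
        scores.append(2 ** (-avg_path / c(sample_size)))
    return scores

amounts = [10.0, 10.5, 11.0, 9.5, 10.2, 9.8, 10.7, 10.1, 10.4, 9.9, 50.0]
scores = anomaly_scores(amounts)
print(max(range(len(amounts)), key=lambda i: scores[i]))  # 10 (the 50.0 transaction)
```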

One-Class SVM trains on a single class of (normal) data, learns a boundary around it, and flags new data points that fall outside that boundary as outliers.

3. Semi-supervised Learning: Semi-supervised learning (SSL) combines a small amount of labelled data with a larger unlabelled dataset, improving model performance when labelling is costly or time-consuming.

By combining supervised and unsupervised learning, SSL blends the strengths of both approaches. It is widely used in speech recognition, photo tagging, and medical imaging, where labelling large datasets (e.g., CT scans or MRIs) is expensive and time-consuming.

- Model Initialization: Train a baseline model on the labelled data using supervised learning (e.g., classification).

- Pseudo Labelling: Use the trained model to predict labels for unlabelled data. Add high-confidence predictions to the training set.

- Iterative Refinement: Retrain the model with labelled + pseudo-labelled data and evaluate accuracy. Repeat until the desired accuracy is reached.

- Fine Tuning: Fine-tune the model on the original labelled data and validate its performance.
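The pseudo-labelling loop can be sketched as follows, assuming a deliberately simple base model (a nearest-centroid classifier on one feature) and using the distance gap between the two nearest class centroids as the "confidence". All names, thresholds, and measurements are hypothetical; the loop stops when no confident predictions remain, standing in for the accuracy check, and the final fine-tuning step is left out.

```python
def fit_centroids(xs, ys):
    """Hypothetical baseline model: the mean feature value of each class."""
    centroids = {}
    for label in set(ys):
        members = [x for x, y in zip(xs, ys) if y == label]
        centroids[label] = sum(members) / len(members)
    return centroids

def predict_with_gap(centroids, x):
    """Nearest-centroid prediction; the gap to the second-nearest acts as confidence."""
    dists = sorted((abs(x - c), label) for label, c in centroids.items())
    return dists[0][1], dists[1][0] - dists[0][0]

def self_train(labelled_x, labelled_y, unlabelled_x, min_gap=2.0, max_rounds=5):
    xs, ys = list(labelled_x), list(labelled_y)
    pool = list(unlabelled_x)
    for _ in range(max_rounds):
        model = fit_centroids(xs, ys)  # (re)train on labelled + pseudo-labelled data
        confident = []
        for x in pool:
            label, gap = predict_with_gap(model, x)  # pseudo-label each unlabelled point
            if gap >= min_gap:
                confident.append((x, label))
        if not confident:
            break  # nothing left that the model is confident about
        for x, label in confident:  # keep only high-confidence pseudo-labels
            xs.append(x)
            ys.append(label)
            pool.remove(x)
    return fit_centroids(xs, ys), pool

# Hypothetical 1-D scan measurements: only two are labelled by an expert
model, still_unlabelled = self_train([1.0, 9.0], ["healthy", "disease"], [1.5, 2.0, 8.0, 8.5, 5.2])
print(model, still_unlabelled)
```

The ambiguous value 5.2 never reaches the confidence threshold, so it stays unlabelled rather than risking a wrong pseudo-label.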

Example: Google’s DeepMind uses SSL to diagnose eye diseases from OCT scans with minimal labelled data.

4. Reinforcement Learning: A reward-based learning approach in which an agent observes its environment, takes an action, receives feedback (a reward), and updates its policy, repeating this loop to maximize the total reward it collects over time.

Model-Free Algorithm (e.g., Q-learning): The agent learns directly from experience, without a model of the environment. Q-learning estimates a Q-value for each state-action pair (the expected future reward of taking that action in that state) and acts to maximize it.

Variants: Deep Q-Network (DQN), SARSA

Applications: Healthcare, Robotics, Gaming
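A tabular Q-learning sketch on a made-up "corridor" environment: five states in a row, the agent starts at the left end, and it earns a reward of 1 for reaching the right end. Exploration is epsilon-greedy, and all hyperparameters are illustrative.

```python
import random

def q_learning_corridor(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: start at state 0, reward 1 for reaching the last state."""
    rng = random.Random(seed)
    actions = [-1, +1]  # move left or right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy behaviour: explore sometimes, otherwise pick a best action
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                best = max(q[(state, a)] for a in actions)
                action = rng.choice([a for a in actions if q[(state, a)] == best])
            next_state = min(max(state + action, 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            # Q-learning target: reward plus discounted best next value (0 at the goal)
            best_next = 0.0 if next_state == goal else max(q[(next_state, a)] for a in actions)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning_corridor()
policy = [max((-1, +1), key=lambda a: q[(s, a)]) for s in range(4)]
print(policy)  # [1, 1, 1, 1]: the learned policy always moves right
```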

Types:

· Policy-Based: Directly learns the policy (e.g., PPO in Robotics)

· Value-Based: Estimates a value function (expected future reward) and derives the policy from it (e.g., Q-learning, DQN in gaming)

· Actor-Critic: Combines both: an actor learns the policy while a critic estimates values to guide its updates (e.g., A2C)

Model-Based Algorithm: Agent builds an environment model to predict future states and rewards, enabling planning without constant interaction.

e.g.: AlphaZero plans with Monte Carlo Tree Search (MCTS), using the known game rules as its model of the environment; its successor, MuZero, learns that model instead.

Applications: Robotics, Autonomous Driving

On- and Off-Policy RL: On-Policy RL learns the value of the policy it is currently following, so exploration stays close to that policy, like on-the-job training (e.g., SARSA). Off-Policy RL learns a target policy from experience generated by a different, often more exploratory, policy and can reuse past experience for efficiency, akin to humans learning from the past (e.g., Q-learning, DQN, DDPG).
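The difference is easiest to see in the two standard update rules, where α is the learning rate, γ the discount factor, r the reward, and s′ the next state:

$$
\text{SARSA (on-policy):}\quad Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma\, Q(s',a') - Q(s,a) \right]
$$

$$
\text{Q-learning (off-policy):}\quad Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{b} Q(s',b) - Q(s,a) \right]
$$

SARSA bootstraps from a′, the action its current policy actually takes in s′, whereas Q-learning bootstraps from the best action in s′, regardless of what the exploring policy did next.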

Other RL: Hierarchical RL (HRL) breaks tasks into subtasks with individual policies and is used in robotics and gaming. Multi-Agent RL (MARL) trains several agents that interact cooperatively or competitively, as in multiplayer games. Inverse RL (IRL) infers the reward function by observing an expert's behavior and is used in humanoid and robot training. Distributional RL learns the full distribution of possible returns rather than only their expected value.

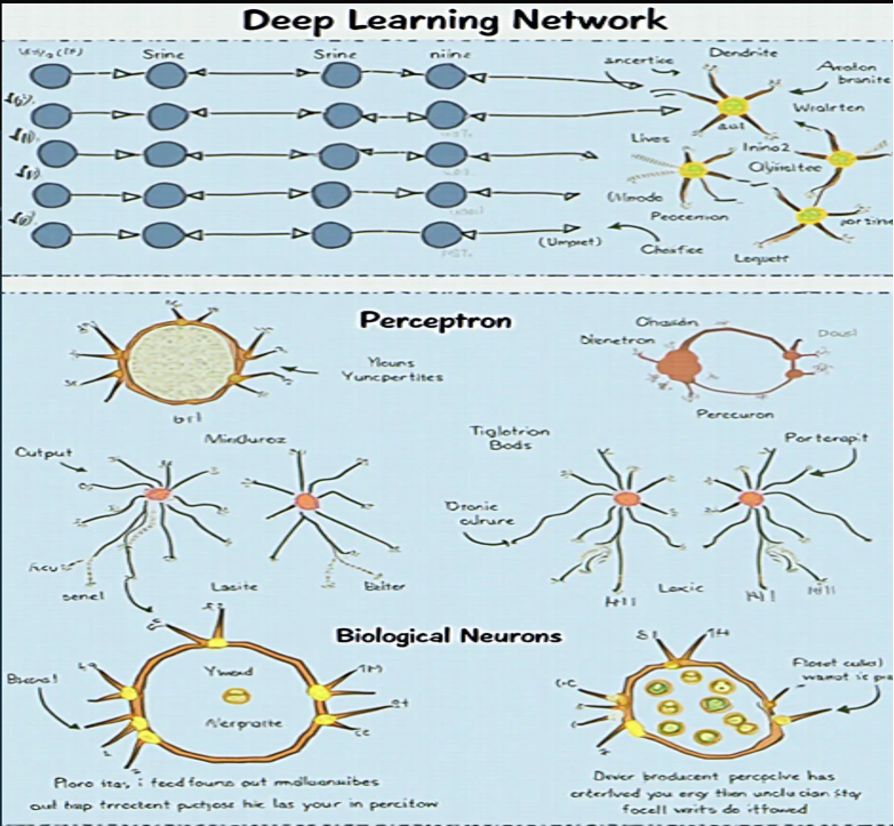

5. Deep Learning:

A subset of ML that uses Artificial Neural Networks (ANN) with multiple layers (e.g., the Multilayer Perceptron), loosely inspired by the human brain. The architecture (number and type of layers) is chosen for the specific use case, and training adjusts the weights and biases to fit the patterns in the data.

Convolutional Neural Networks (CNN): Uses convolutional layers to process images and extract spatial features.
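The core operation of a convolutional layer can be sketched in plain Python: slide a small kernel over the image and take a weighted sum at each position ("valid" padding, stride 1). Like most deep learning libraries, this is technically cross-correlation (no kernel flip). The edge-detecting kernel is hand-picked for illustration; a CNN learns its kernels during training.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation) of a 2-D list with a 2-D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
vertical_edge = [[-1, 1], [-1, 1]]  # responds where brightness increases left to right
print(conv2d(image, vertical_edge))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The strong response in the middle column marks the edge; stacking many learned kernels like this is how CNNs extract spatial features.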

Applications:

Image Classification: Classifies images (e.g., Cats vs Dogs, Snakes vs Eels).

Object Detection: Identifies objects in images (e.g., face detection).

Medical Imaging: Detects anomalies (e.g., tumours in X-rays, MRIs, CT scans)

e.g. ResNet achieved high accuracy in ImageNet classification, categorizing millions of images.

Recurrent Neural Networks (RNN): Process sequential data by carrying a hidden state from one step to the next, which captures time-based dependencies.

Variants: LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Units) address vanishing gradient issues.
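A vanilla RNN step can be sketched with a one-dimensional hidden state: the same weights are applied at every time step, and the hidden state carries information forward. The weights below are hypothetical; LSTM and GRU cells add gates on top of this basic recurrence, which the sketch does not show.

```python
import math

def rnn_forward(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Vanilla RNN with a 1-D hidden state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # the same weights are reused at every step
        states.append(h)
    return states

print(rnn_forward([1.0, 0.0, 0.0]))  # the first input's influence fades step by step
print(rnn_forward([1.0, 0.0])[-1] != rnn_forward([0.0, 1.0])[-1])  # True: order matters
```

The fading influence of early inputs hints at why plain RNNs struggle with long sequences, the problem LSTM and GRU were designed to ease.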

Applications:

Time Series Forecasting: Predicts future trends (e.g., stock prices, seasonal sales).

Natural Language Processing (NLP): Tasks like text generation, sentiment analysis, and machine translation.

Speech Recognition: Converts speech to text.

e.g. Google Translate's neural machine translation system, introduced in 2016, used LSTM-based RNNs for multi-language translation.

Transformers: Leverage attention mechanisms for parallel processing of sequences, excelling in NLP tasks.
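The attention mechanism at the heart of a Transformer is scaled dot-product attention. This plain-Python sketch covers a single head and, as a simplification, omits the learned query/key/value projection matrices; the vectors are toy values.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, for a single head."""
    d = len(keys[0])
    outputs = []
    for q in queries:  # each query is independent, so these could run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this position attends to each position
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs

# Toy example: the query matches the first key far better than the second
out = attention(queries=[[4.0, 0.0]], keys=[[1.0, 0.0], [0.0, 1.0]], values=[[1.0, 0.0], [0.0, 1.0]])
print(out)  # the first value vector dominates the weighted average
```

Because every query is scored against all keys at once, there is no step-by-step hidden state as in an RNN, which is what allows parallel processing of the whole sequence.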

Applications:

Chatbots and Virtual Assistants: Power conversational AI (e.g., ChatGPT).

Text Summarization: Condenses large documents while retaining context.

Search Engine/ Page Ranking: Ranks results/pages contextually.

e.g. ChatGPT powers applications from coding assistance to content generation.

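Generative Adversarial Networks (GAN): Two neural networks compete: a generator creates synthetic samples, and a discriminator tries to tell them apart from real data. Each improves by trying to beat the other.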
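This competition is usually written as the original GAN minimax game, where G maps random noise z to synthetic samples and D(x) is the discriminator's estimated probability that x is real:

$$
\min_{G} \max_{D} \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
$$

D is trained to maximise V (classify real and fake correctly), while G is trained to minimise it (fool D).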

Applications:

Image Generation: Creating realistic images of faces, bodies, or any other subject.

Data Augmentation: Generating additional training data for ML models.

Style Transfer: Converting images into artistic styles.

e.g. DeepFake Technology uses GANs to generate realistic fake videos.

Deep Belief Networks (DBN): Generative models built from stacked Restricted Boltzmann Machines (an unsupervised category of Deep Learning models).

Applications:

Feature Learning: Pre-training to enhance neural network performance.

Healthcare: Patient data modelling for predictive analytics

e.g. Handwritten digit recognition on the MNIST dataset.

Deep Reinforcement Learning: Combines Deep Neural Networks with Reinforcement Learning techniques.

Applications:

Gaming: AI agents that learn to play video games autonomously.

Industrial Automation: Manufacturing process optimization.

Personalized Learning: Tailored content generation for online education systems.

e.g., OpenAI Five defeating professional Dota 2 players.

Many more algorithms exist, each deserving deeper exploration. While Reinforcement and Deep Learning warrant dedicated discussions, this overview highlights key ML algorithms for a quick yet impactful understanding.
